Mastering Claude Code: A Guide to Skill-Based Architecture

Published: 2026-01-08

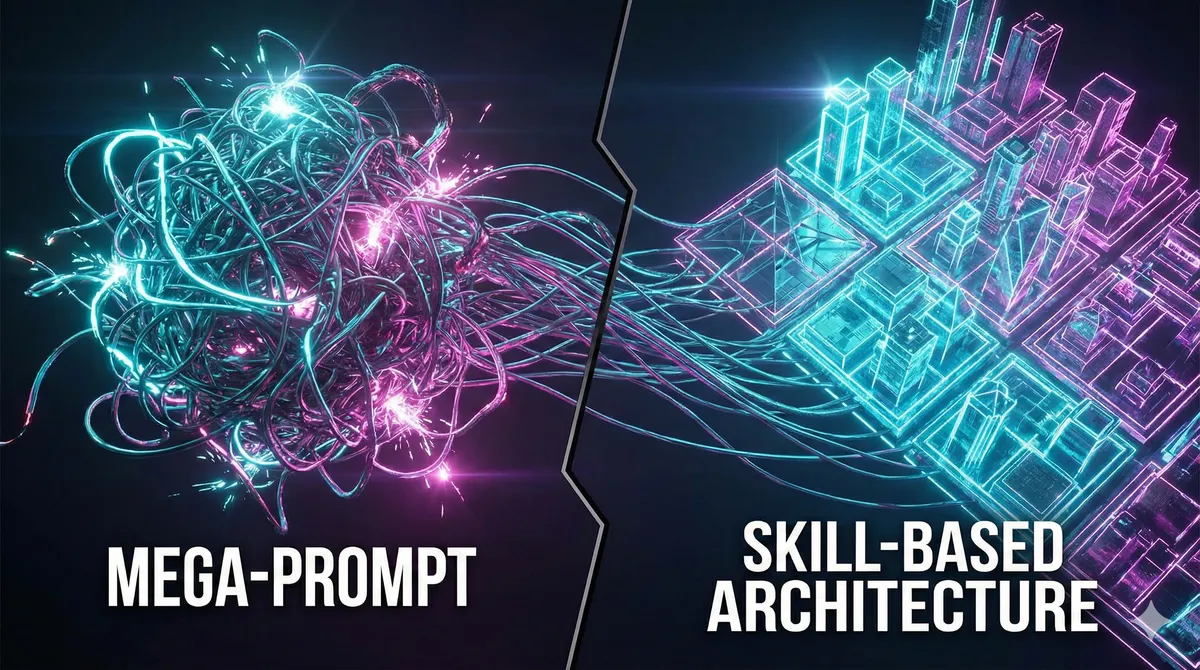

Moving from Chaotic Queries to Modular Architecture

Have you ever asked an AI to design a system, only to receive a "wall of text" and 1,000 lines of code, all built on a single flawed assumption made at the start of the conversation?

This is the classic "hallucination in a vacuum" problem.

The traditional approach to prompting (one big query → one big response) is slowly giving way to a Skill-based Architecture. In today's post, we'll analyze why a modular approach works better and how to design your own tools that turn chaotic exchanges into precise engineering.

Why Does the "Skill-Based Approach" Work? 4 Pillars of Effectiveness

Analyzing the best-performing skills (such as those used for system architecture brainstorming), we can identify four key mechanisms that determine their success:

1. Minimizing Cognitive Load

A good AI assistant shouldn't bombard you with requests like "describe the entire system in detail." Instead, an effective skill makes the model ask one question at a time, preferably as multiple choice.

Advantage: People respond faster and more willingly to short, specific questions.

Safety: If the AI misunderstands one small fragment, you correct it immediately instead of fixing a complete but flawed design.
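
To make the "one question at a time, multiple choice" idea concrete, here is a minimal Python sketch. The `Question` class and the `render` and `resolve` helpers are hypothetical names invented for this illustration; they are not part of Claude Code or any library.

```python
from dataclasses import dataclass


@dataclass
class Question:
    """One short multiple-choice question (hypothetical structure)."""
    prompt: str
    options: list[str]


def render(question: Question) -> str:
    """Format a single question with lettered options."""
    lines = [question.prompt]
    for index, option in enumerate(question.options):
        lines.append(f"  {chr(ord('A') + index)}) {option}")
    return "\n".join(lines)


def resolve(question: Question, reply: str) -> str:
    """Map a reply such as 'b' back to the chosen option text."""
    letter = reply.strip().upper()
    if len(letter) != 1:
        raise ValueError(f"Expected a single letter, got {reply!r}")
    index = ord(letter) - ord("A")
    if not 0 <= index < len(question.options):
        raise ValueError(f"Unknown option: {reply!r}")
    return question.options[index]
```

Because each answer maps to exactly one option, a misunderstanding shows up at the very next step instead of surfacing in a finished design.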

2. "Incremental Validation" Architecture

Instead of generating entire documentation at once, the skill divides the presentation into small sections (200–300 words) and after each one asks: "Does this look good?"

This is a fundamental difference. It prevents building complex structures on foundations of sand. If the first section is wrong, AI doesn't waste tokens (and your time) generating the next five.
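
The same idea can be sketched in a few lines of Python. This is an illustration under assumptions, not how any particular skill is implemented: `present_incrementally` and the `approve` callback are made-up names, and the 300-word limit simply mirrors the range mentioned above.

```python
from typing import Callable, Iterable


def present_incrementally(
    sections: Iterable[str],
    approve: Callable[[str], bool],
    max_words: int = 300,
) -> list[str]:
    """Show sections one at a time and stop at the first rejection."""
    accepted: list[str] = []
    for section in sections:
        if len(section.split()) > max_words:
            raise ValueError("Section exceeds the word limit")
        if not approve(section):
            # Stop here: later sections are never requested from the iterable.
            break
        accepted.append(section)
    return accepted
```

If `sections` is a generator, the sections after a rejected one are never produced at all, which is the code-level version of "not wasting tokens."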

3. Exploration Phase (Variant Thinking)

The biggest sin of working with LLMs? Accepting the first solution the model "spits out."

Good skills enforce an Exploration Phase – the AI must propose 2–3 approaches and analyze their trade-offs. This forces the model to go beyond the simplest statistical path, and it builds trust: the user can see that the AI is weighing costs and complexity.
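
One way to picture the Exploration Phase is as a small data structure that refuses to accept a single option. The sketch below is purely illustrative: the `Approach` class, the 1-to-5 scores, and the `compare` function are assumptions made for this example, and real trade-off analysis is obviously richer than adding two numbers.

```python
from dataclasses import dataclass


@dataclass
class Approach:
    """One candidate design with rough scores (1 = low, 5 = high)."""
    name: str
    cost: int
    complexity: int
    note: str


def compare(approaches: list[Approach]) -> list[str]:
    """Require two or three candidates and list them from lightest to heaviest."""
    if not 2 <= len(approaches) <= 3:
        raise ValueError("Propose two or three approaches")
    ranked = sorted(approaches, key=lambda a: a.cost + a.complexity)
    return [
        f"{a.name}: cost {a.cost}, complexity {a.complexity} ({a.note})"
        for a in ranked
    ]
```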

4. Modularity and "Skill Chaining"

A skill isn't a Swiss Army knife for everything. If a skill is for design, then after completing its work it should "pass the baton" to another module, such as one responsible for deployment planning or Git management.

This maintains logical clarity – one tool does one thing, but does it perfectly.
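
"Passing the baton" can be modeled as each skill returning the name of the next one. The sketch below assumes a hypothetical in-memory registry (`SKILLS`) and two toy skills; it only illustrates the handoff pattern and says nothing about how Claude Code chains skills internally.

```python
from typing import Callable, Optional

# A skill updates the shared state and names the next skill (or None to stop).
Skill = Callable[[dict], Optional[str]]


def design(state: dict) -> Optional[str]:
    state["plan"] = "approved design"
    return "deployment_planning"  # hand off instead of doing everything


def deployment_planning(state: dict) -> Optional[str]:
    state["rollout"] = f"rollout for {state['plan']}"
    return None  # end of the chain


SKILLS: dict[str, Skill] = {
    "design": design,
    "deployment_planning": deployment_planning,
}


def run_chain(start: str, state: dict) -> list[str]:
    """Run skills in sequence, following each skill's handoff."""
    visited: list[str] = []
    current: Optional[str] = start
    while current is not None:
        visited.append(current)
        current = SKILLS[current](state)
    return visited
```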

How to Build Your Own Skills? (Guide/Blueprint)

Want to create your own LLM automation tool? Here's a set of rules worth implementing in your system prompts:

I. Define Clear Temporal and Logical Boundaries

A skill must know when to "enter the game" and when to remain dormant.

Principle: Each skill must have a clear entry point (e.g., raw idea) and exit point (e.g., approved plan). Use when_to_use sections in prompts so the model knows in which situations to activate a given procedure.
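
As a rough sketch, the boundaries can be written down as data and rendered into a prompt header. Only `when_to_use` comes from the principle above; `SkillSpec`, `entry_point`, `exit_point`, and `to_prompt` are hypothetical names for this example and do not describe an official skill file format.

```python
from dataclasses import dataclass


@dataclass
class SkillSpec:
    """Boundaries of a single skill (illustrative fields)."""
    name: str
    when_to_use: str
    entry_point: str
    exit_point: str


def to_prompt(spec: SkillSpec) -> str:
    """Render the boundaries as a header for a system prompt."""
    return "\n".join([
        f"name: {spec.name}",
        f"when_to_use: {spec.when_to_use}",
        f"entry_point: {spec.entry_point}",
        f"exit_point: {spec.exit_point}",
    ])


brainstorming = SkillSpec(
    name="brainstorming",
    when_to_use="The user has a raw idea and no approved plan yet",
    entry_point="raw idea",
    exit_point="approved plan",
)
```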

II. Design for "Feedback Loops"

The creative process is rarely a straight line.

Principle: Your skill should contain instructions like "When to Revisit Earlier Phases." If halfway through design you change your mind about the database, AI must know to return to step 1, not force its way forward. This makes AI behave like an intelligent partner, not a rigid script.
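
In code terms, a feedback loop is a phase sequence that is allowed to move backwards. The sketch below assumes three phases (Understanding, Exploration, Design) and a hypothetical `next_phase` helper; in a real skill this logic lives in the prompt instructions, not in Python.

```python
from typing import Optional

PHASES = ["understanding", "exploration", "design"]


def next_phase(current: str, revisit: Optional[str] = None) -> str:
    """Advance one phase, or jump back when an earlier decision changes."""
    position = PHASES.index(current)
    if revisit is not None:
        target = PHASES.index(revisit)
        if target > position:
            raise ValueError("Can only revisit the current or an earlier phase")
        return revisit
    # Stay on the last phase once it is reached.
    return PHASES[min(position + 1, len(PHASES) - 1)]
```

For example, `next_phase("design", revisit="understanding")` models the "changed my mind about the database" case: the conversation returns to step 1 instead of pushing forward.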

III. Apply "Quality Guardrails"

Don't allow the model complete freedom. Introduce strict constraints in the Remember or Constraints section:

- Word limit: e.g., "Present max 300 words per section"

- Socratic Method: "Ask only one question at a time"

- YAGNI: "Be ruthless in eliminating unnecessary features"
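
Two of these guardrails are simple enough to check mechanically. The following sketch is an assumption-laden illustration: `check_guardrails` is a made-up helper, and counting question marks is only a crude stand-in for "one question at a time." YAGNI is a judgment call and is deliberately left out.

```python
def check_guardrails(reply: str, max_words: int = 300) -> list[str]:
    """Return the guardrails a model reply violates (empty list if none)."""
    violations: list[str] = []
    if len(reply.split()) > max_words:
        violations.append("word limit exceeded")
    # Crude heuristic: more than one '?' suggests several questions at once.
    if reply.count("?") > 1:
        violations.append("more than one question")
    return violations
```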

IV. Context is the Foundation

AI doesn't operate in a vacuum.

Principle: The first step of every skill (in the Understanding phase) should be checking the current project state (working directory, existing files). Only with this context can the model ask sensible questions.
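
A context check can be as simple as listing what is already in the project before asking anything. The sketch below uses only Python's standard `pathlib`; the `project_snapshot` name, the 50-file limit, and the choice to skip `.git` are assumptions made for this example.

```python
from pathlib import Path


def project_snapshot(root: Path, limit: int = 50) -> str:
    """Summarize the working directory and its files as plain text."""
    files = sorted(
        path.relative_to(root).as_posix()
        for path in root.rglob("*")
        if path.is_file() and ".git" not in path.relative_to(root).parts
    )
    lines = [f"Working directory: {root}", f"Files ({len(files)} total):"]
    # Show at most `limit` files so the summary stays short.
    lines += [f"- {name}" for name in files[:limit]]
    return "\n".join(lines)
```

Calling `project_snapshot(Path.cwd())` at the start of the Understanding phase gives the model something concrete to ask about.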

Summary: Traditional Prompt vs. Skill-Based Approach

The difference is dramatic. See how work dynamics change after implementing skill-based architecture:

1. Conversation Flow

Traditional: Linear (Question -> Long Answer)

Skill-based: Iterative (Phases <-> Returns <-> Validation)

2. Structure

Traditional: Often lacking, chaotic response structure

Skill-based: Strict phases (Understanding, Exploration, Design)

3. Responsibility

Traditional: AI tries to do everything at once (and often fails)

Skill-based: AI delegates tasks to specialized sub-skills

4. Interaction

Traditional: Large blocks of text, difficult for humans to verify

Skill-based: Short interactions, small-step method

Implementing such an architecture transforms the model from a "text generator" into an "active consultant" that guides you step by step from idea to finished solution, reducing the risk of error along the way.

What does your workflow with LLMs look like? Do you create your own system prompts, or do you rely on the "pure" model? Let me know in the comments!