Load Balancing: What It Is and Why You Need It

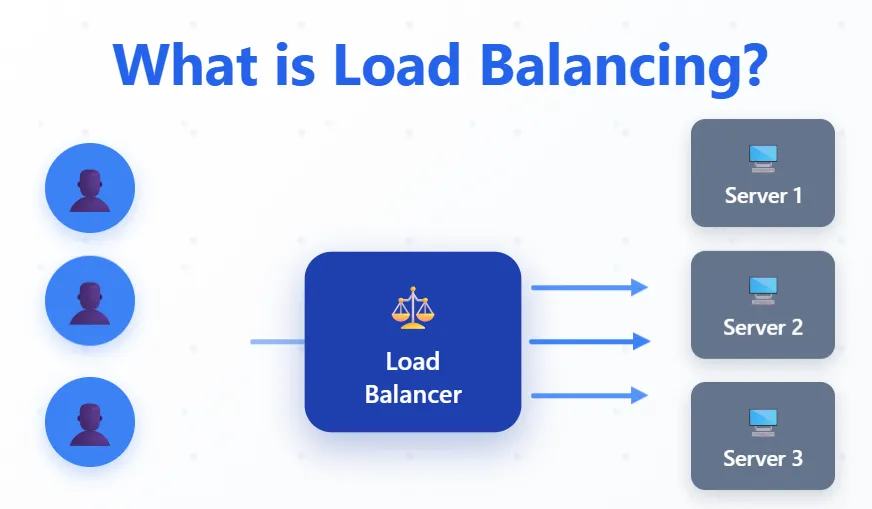

Load balancing is a technique for distributing tasks or network traffic among multiple servers/resources to improve service performance and reliability. This ensures that no single server becomes overloaded. Without a load balancer, all requests would go to one machine, quickly leading to overload. A load balancer distributes traffic evenly, reducing application response time and increasing availability.

Benefits of Load Balancing

Higher Performance: Requests are distributed across multiple servers, speeding up user service.

Greater Availability: The failure of one server doesn't cause service interruption – the remaining servers take over the traffic.

Scalability: It's easier to add new servers (e.g., in the cloud) as traffic grows.

Resource Optimization: No server sits idle, and none becomes overloaded.

Basic Types of Load Balancing

Network Load Balancing (Layer 4)

Operates at the transport level (TCP/UDP). A load balancer in this mode works like a simple router – forwarding packets to destination servers without examining the content of higher-layer protocols. This allows it to handle traffic very quickly.

Application Load Balancing (Layer 7)

Operates at the application level (e.g., HTTP, HTTPS). It analyzes request content (headers, URLs, etc.) and routes them to the most appropriate servers. For example, product queries can go to a server with an inventory database, improving service efficiency.

Traffic Distribution Methods

Load balancers use various algorithms for routing requests:

Round Robin

The simplest static algorithm that sends consecutive requests alternately to consecutive servers in the pool. For example, the first request goes to server A, the second to B, the third back to A, etc.

Least Connections

A dynamic algorithm. New requests are routed to the server with the fewest current connections, assuming it can handle new tasks fastest.

IP Hash

A static algorithm. Based on the client's IP address (or another header field), a hash is generated that always points to the same server. This enables "sticky sessions" by keeping clients connected to the same server.

Use Case Examples

Web Services (WWW, E-commerce)

Popular portals and online stores distribute HTTP traffic across multiple web servers to serve many users simultaneously.

Microservices

In microservice architecture, each service (e.g., authentication, database) can have its own load balancer distributing requests among its instances.

APIs and Cloud Applications

REST/API services route queries to groups of API servers. Load balancers work with cloud auto-scaling mechanisms (e.g., AWS Auto Scaling), ensuring elasticity and availability.

CDN (Content Delivery Networks)

Global load balancers direct users to the nearest or least loaded edge server, minimizing latency and ensuring faster content delivery.

Popular Tools and Technologies

NGINX

A popular web server often used as a load balancer (with minimal configuration, it can distribute HTTP traffic).

HAProxy

An open-source, high-performance load balancer designed for network traffic; often used in high-load services.

Apache HTTP Server (mod_proxy_balancer)

A web server with a load balancing module that supports web connections and is easy to configure.

Cloud Services (LBaaS)

Services like AWS Elastic Load Balancer (ELB) – a managed load balancing service in Amazon Web Services. Similar services operate in Azure, Google Cloud, etc.

Hardware Solutions

Professional appliances (e.g., from F5, Citrix) – typically found in large data centers and corporations.

How It Works (Example)

The load balancer operation is straightforward: a client sends a request (e.g., HTTP) to the service's public address, which reaches the load balancing device. The load balancer selects one of the available servers (e.g., using round-robin or least connections method) and forwards the request to it.

The server processes the query and returns a response through the load balancer to the client. If any server fails or becomes overloaded, the load balancer detects this (e.g., through built-in health checks) and routes traffic to the remaining operational machines.

This way, traffic is evenly distributed, and the service remains available even during failures, ensuring optimal performance and reliability for your applications.