Is Claude Code Hallucinating Again? Learn the AI Agents Architecture That Will Cure Your Terminal

Published: 2026-01-13

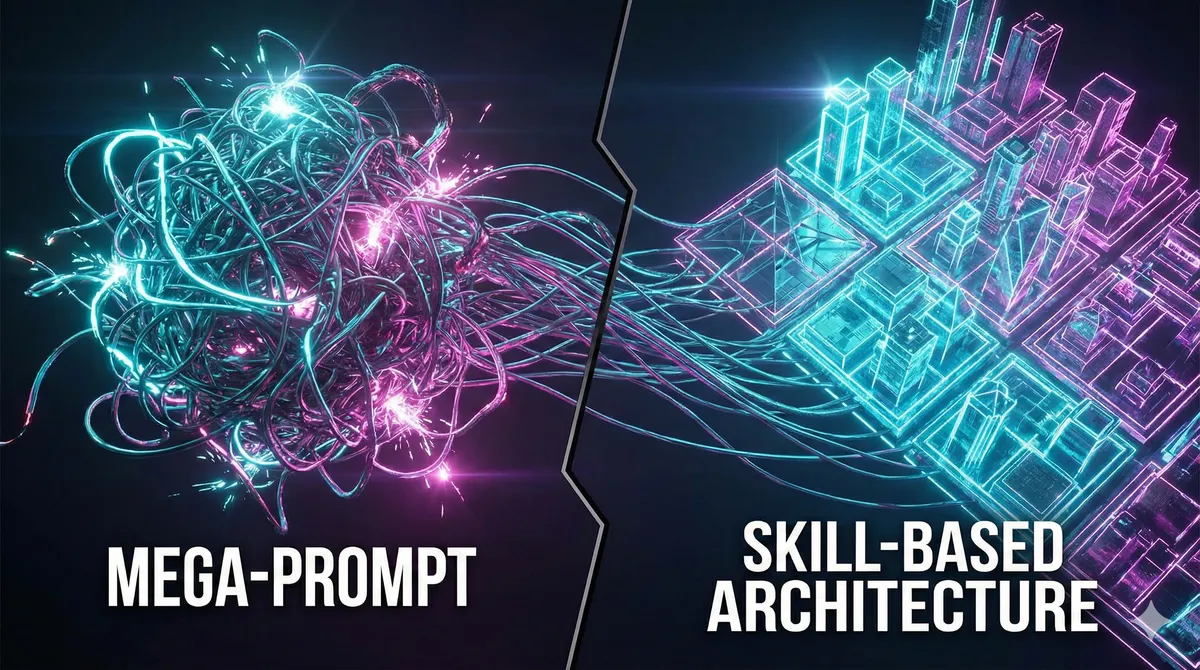

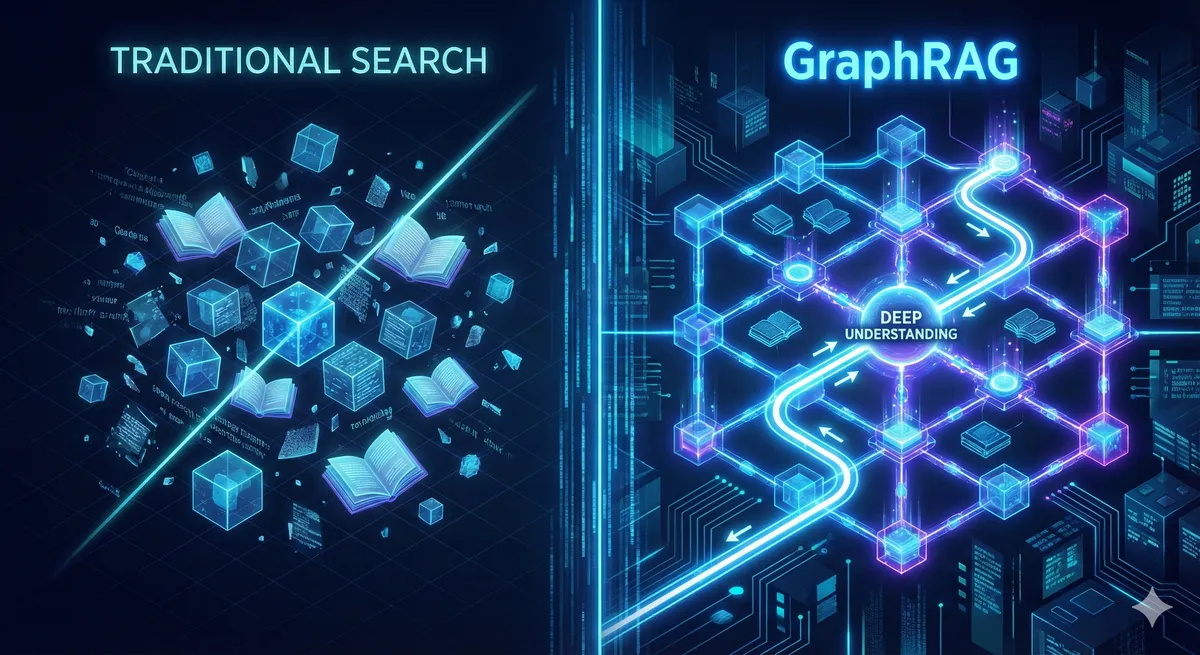

Hallucinations aren't a bug in LLM systems; they're a feature. The solution isn't waiting for the next language model, but changing your workflow. Here's how to use the MCP standard, CLAUDE.md files, and a multi-agent strategy to transform guessing into solid engineering.